For each story I create, I like to include a unique protagonist. Depending on the context and on which visual style communicates best, I make the figure as a clay model or a simple drawing. Once the protagonist is complete, I create the rest of the characters in the story in a similar visual style.

By training an AI model, I can create my figures once and then use them in each scene of this story and any other pieces I create for the same client. The trained model is part of the client’s brand.

However, this is not as straightforward as the AI world claims.

DreamBooth in Stable Diffusion

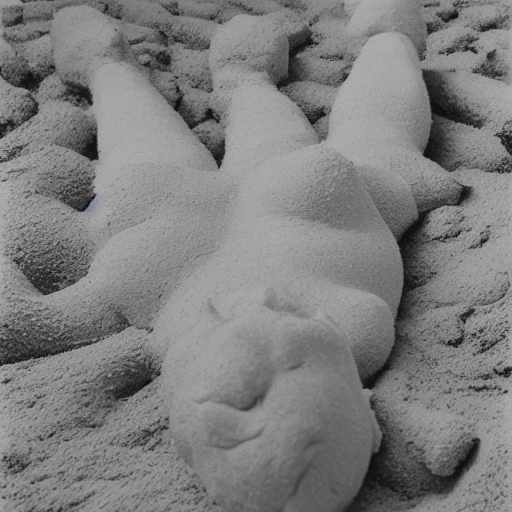

This clay figure lying down is a craniosacral therapy patient who stars in xxxx Craniosacral Therapy Workshops.

The course visuals require her to be viewed lying down from the side and from above her head and feet, to demonstrate how a craniosacral therapist performs their treatments.

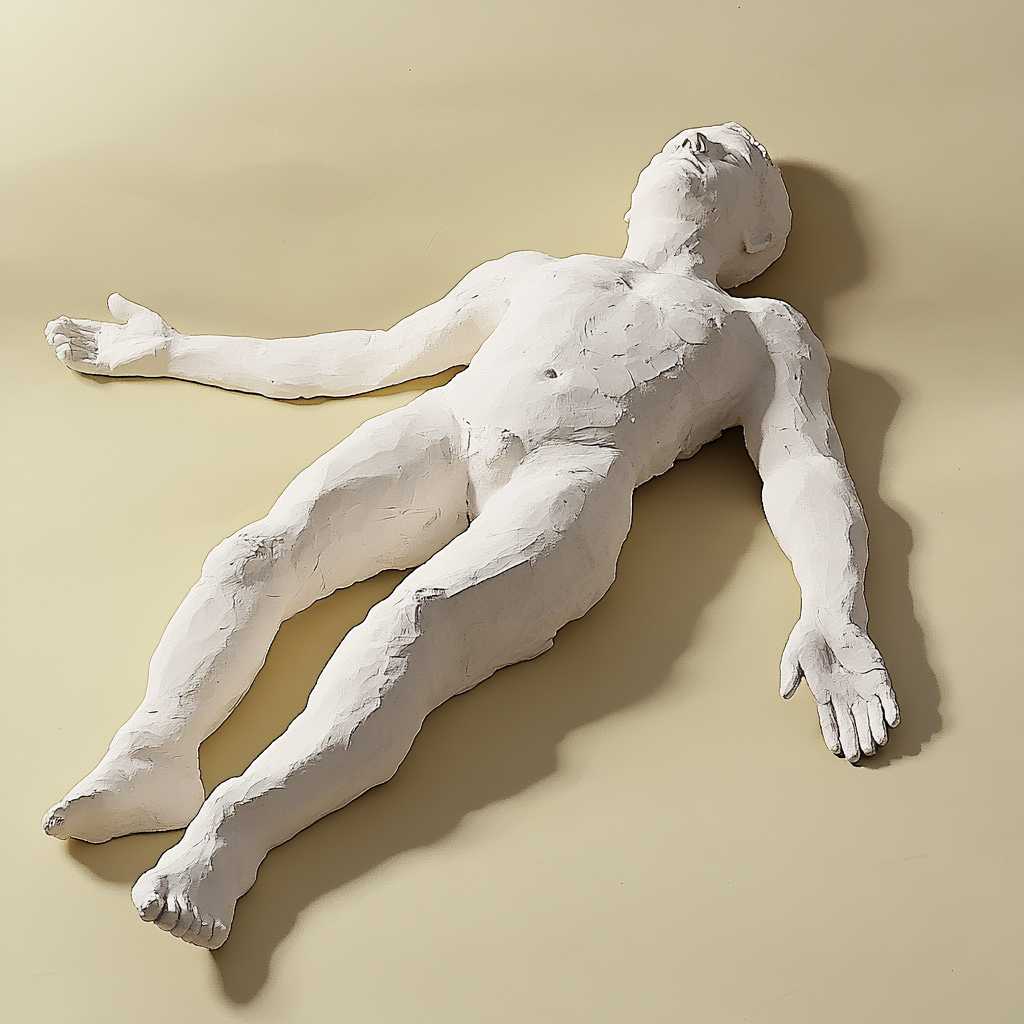

This is the initial model I created in my studio.

I photographed her from various angles and then fed the images into Google’s Dream…. using ..

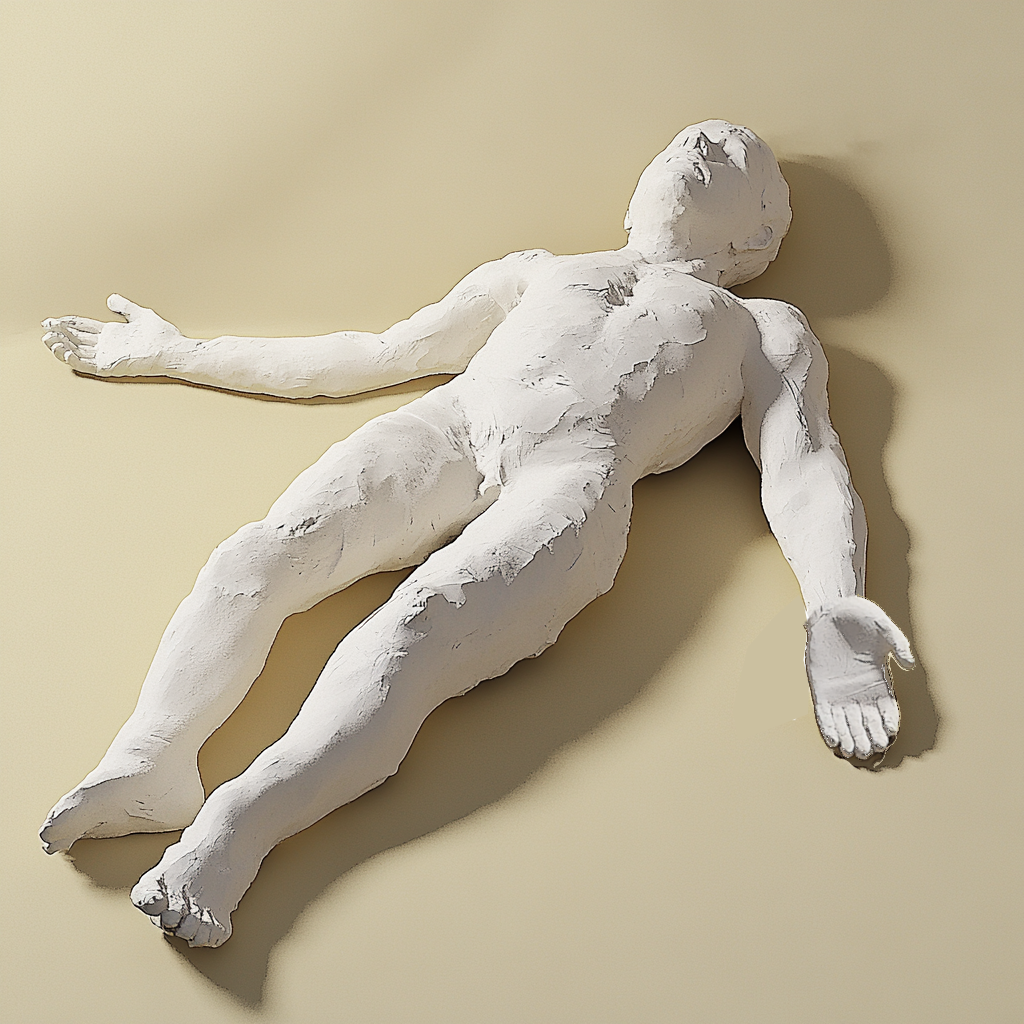

The results are entertaining but useless. Here are a few examples, created by prompting the model to generate an image of my figure lying down and viewed from the side. Clearly, this prompt makes no sense to the model: all it has given me are distorted versions of my original photos.

Cookie dough feet and some extra limbs:

Next, I repeated the process using a different model and asked for my figure to be shown lying in a corn field.

Clearly, this needs more work and more research.

Midjourney image-to-image

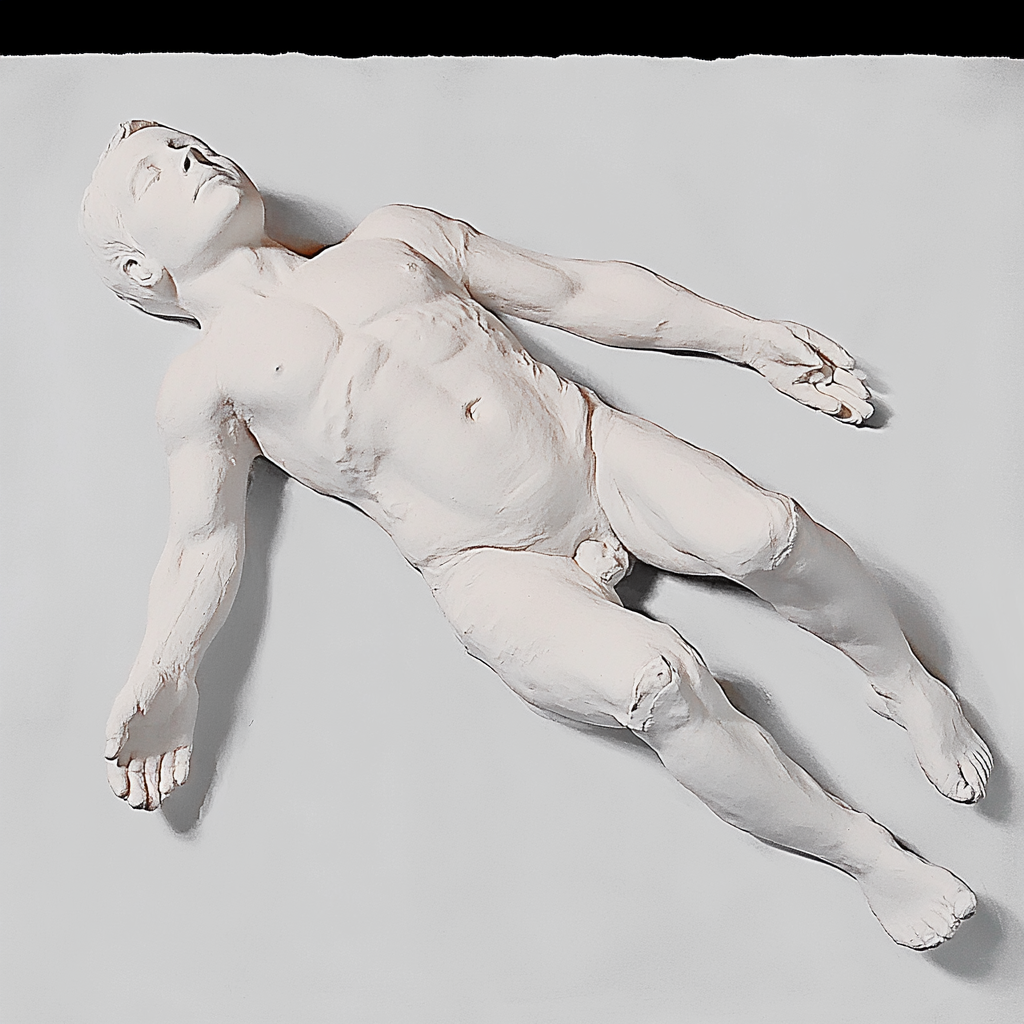

Next, I moved over to Midjourney and asked it to create some images from one of my photos. With one image and this prompt,

“Create an accurate pencil sketch of this plaster model lying on a plain pale yellow background, the figure has straight legs, no deformities”

I created some images that I can use. However, this doesn’t solve my problem, because I need to create one character that can star in every scene of my story and that I can use to render other characters.

The figure’s left hand was attached the wrong way round, so I’ve made a quick and dirty edit to fix it in the first image.